Partners: Gus Kitchell & Charles Elmer

Overview

This project was my first stab at transcoding: converting one source of information into another format. In this case, we converted biological information (the amino acid sequence of a protein) into an auditory output. The specific protein we chose was the ZENK protein (also known as Early Growth Response Protein 1, or EGR1) found in the forebrain of the zebra finch (Figure 1), a species that has been studied extensively and for which a great deal of genetic information is available. The ZENK protein is involved in vocal communication, and studies have suggested that its production may be triggered when songbirds hear the songs of other individuals of the same species (Mello and Ribeiro, 1998). For this reason, we saw a parallel between the function of the ZENK protein and the goal of our project.

Many artists have converted biological concepts or transcoded biological information into music, so we are certainly not the first to take on this kind of project. However, we feel that the music these projects produce tends to be rather abstract and cacophonous. Perhaps this should not be a surprise; there is no inherent reason that a sequence of amino acids should magically transform into a chart-topping pop hit. Still, Charles and I were very interested in the idea of protein music, and thought it might be worthwhile to produce a piece that is more accessible to modern listeners. In short, we wanted to create something that sounds more like a popular song you might hear on the radio, while still using biological information as its foundation.

Our reasoning was fairly simple: although we were intrigued by the dissonant music produced by past transcoding artists, we found it hard to understand. For the average listener, it can be difficult to hear the connection between the dissonant sounds and their biological source material. As a result, they may feel overwhelmed and ultimately lose interest; it is hard to engage with something you don’t understand. Thus, we sought to produce music that would be more familiar to the average listener and might spur them to ask questions. What protein sequence did you use to create the bass line? How can the same sequence be used to create a guitar melody and a drum solo? How is it possible to convert a protein sequence into a catchy song? These questions still reflect a limited understanding on the listener’s part, but we hope listeners can at least form a picture of what they are hearing. If we have done our job well (that is, produced a catchy tune), we hope it will prompt more listeners to engage with the idea of transcoding and protein music.

Thus, the ZENK protein, which is involved in vocal communication in the zebra finch, seemed like a particularly suitable foundation for our project, which attempts to communicate the concept of protein music to a general audience.

Methodology

We began by downloading the amino acid sequence for the ZENK protein, found on the RCSB Protein Data Bank.

CDRRFSRSDE LTRHIRIHTG QKPFQCRICM RNFSRSDHLT THIRTHTGEK PFACDICGRK FARSDERKRH TKIHLRQKDK KVEKAAPAST ASPIPAYSSS VTTSYPSSIT TTYPSPVRTA YSSPAPSSYP SPVHTTFPSP SIATTYPSGT ATFQTQVATS FSSPGVANNF SSQVTSALSD INSAFSPRTI EIC

(http://www.rcsb.org/pdb/protein/O73693?evtc=Suggest&evta=ProteinFeature%20View&evtl=OtherOptions)

The sequence is written in the conventional amino acid “single letter code,” which is listed below.

Single Letter Code

- G – Glycine (Gly)

- P – Proline (Pro)

- A – Alanine (Ala)

- V – Valine (Val)

- L – Leucine (Leu)

- I – Isoleucine (Ile)

- M – Methionine (Met)

- C – Cysteine (Cys)

- F – Phenylalanine (Phe)

- Y – Tyrosine (Tyr)

- W – Tryptophan (Trp)

- H – Histidine (His)

- K – Lysine (Lys)

- R – Arginine (Arg)

- Q – Glutamine (Gln)

- N – Asparagine (Asn)

- E – Glutamic Acid (Glu)

- D – Aspartic Acid (Asp)

- S – Serine (Ser)

- T – Threonine (Thr)

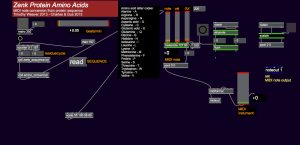

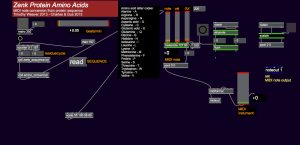

We then ran the amino acid sequence through a MIDI note converter created in MAX by our professor, Tim Weaver. This program reads the single letter code of the amino acid sequence and converts each letter into a MIDI note. Factors such as the volume, duration, note range, and instrument type can all be altered in the MAX patch (Figure 2).

Figure 2. ZENK protein note conversion MAX patch

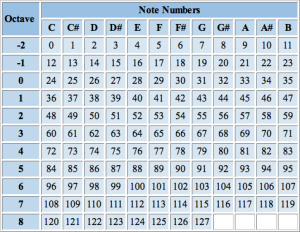

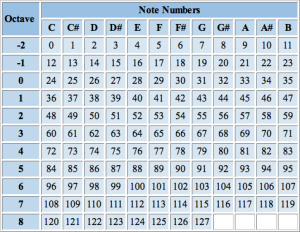

To create a melody that sounds more like a pop song, we restricted each amino acid to a note within a G pentatonic scale. MIDI note numbers run from 0 to 127 and cover the full chromatic scale, so a random assortment of notes tends to sound unmelodic. Assigning amino acids only to MIDI notes that fall within a G pentatonic scale avoids this, but it required us to look up the MIDI note conversion chart (Figure 3).

Figure 3. MIDI note conversion chart.

https://freaksolid.files.wordpress.com/2013/03/midi_note_values.jpg
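For readers who would rather compute the chart than look it up, the short Python sketch below converts a MIDI note number into a note name and checks whether it belongs to the G pentatonic scale. It is only an illustration and was not part of our workflow. The function names and the `octave_offset` parameter are made up for this example, and the default offset assumes the labeling convention in which MIDI note 60 is called C3 (which makes note 55 a 2nd octave G); other charts call the same note G3.

```python
# Note names for the 12 pitch classes, starting from C (MIDI note 0 is a C).
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Pitch classes of the G pentatonic scale used in this project.
G_PENTATONIC = {"G", "A", "B", "D", "E"}


def midi_to_name(note, octave_offset=-2):
    """Return a name such as 'G2' for a MIDI note number (0 to 127).

    octave_offset=-2 assumes MIDI note 60 is labeled C3;
    use octave_offset=-1 for charts that label note 60 as C4.
    """
    if not 0 <= note <= 127:
        raise ValueError("MIDI note numbers run from 0 to 127")
    return f"{NOTE_NAMES[note % 12]}{note // 12 + octave_offset}"


def in_g_pentatonic(note):
    """True if the MIDI note is a G, A, B, D, or E in any octave."""
    return NOTE_NAMES[note % 12] in G_PENTATONIC


if __name__ == "__main__":
    for n in (55, 56, 57, 60):
        print(n, midi_to_name(n), in_g_pentatonic(n))
```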

As noted above, we assigned each amino acid in the ZENK sequence to a note that falls within the G pentatonic scale (G, A, B, D, E, G), beginning with a 2nd octave G (MIDI note #55). The result was a text sequence that looks like the one shown below.

A, 55;

R, 57;

N, 59;

D, 62;

C, 64;

E, 67;

Q, 69;

G, 71;

H, 74;

I, 76;

L, 79;

K, 81;

M, 83;

F, 86;

P, 88;

S, 91;

T, 93;

W, 95;

Y, 98;

V, 100;
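The table above can also be generated rather than typed by hand. The Python sketch below walks up from MIDI note 55, keeps only the notes in the G pentatonic scale, and pairs them with the amino acids in the order used above. This is a reconstruction for illustration only: we built our text file manually, and the names `AMINO_ACIDS` and `pentatonic_notes` are invented for this example.

```python
# Amino acid order as it appears in the table above.
AMINO_ACIDS = "ARNDCEQGHILKMFPSTWYV"

# Pitch classes (note number modulo 12) for G, A, B, D, E.
PENTATONIC_PITCH_CLASSES = {7, 9, 11, 2, 4}


def pentatonic_notes(start=55, count=20):
    """Collect `count` ascending G pentatonic MIDI notes, beginning at `start`."""
    notes = []
    note = start
    while len(notes) < count and note <= 127:
        if note % 12 in PENTATONIC_PITCH_CLASSES:
            notes.append(note)
        note += 1
    return notes


# One ascending pentatonic note per amino acid.
mapping = dict(zip(AMINO_ACIDS, pentatonic_notes()))

if __name__ == "__main__":
    # Print in the same "letter, note;" style as the text file.
    for amino_acid, note in mapping.items():
        print(f"{amino_acid}, {note};")
```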

However, due to the arrangement of the amino acid sequence, this note assignment still produced consecutive notes that were multiple octaves apart, creating a jumpy and disconnected tune. To correct this, we took a simple shortcut, assigning the same set of notes to multiple amino acids. We selected a single octave, from G to G (#55 to #67), and created the text file shown below.

A, 55;

R, 57;

N, 59;

D, 62;

C, 64;

E, 67;

Q, 55;

G, 57;

H, 59;

I, 62;

L, 64;

K, 67;

M, 55;

F, 57;

P, 59;

S, 62;

T, 64;

W, 67;

Y, 55;

V, 57;
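The single-octave assignment above simply cycles through the six notes from G to G. The Python sketch below rebuilds that assignment, converts the first twenty residues of the ZENK sequence into notes, and reports the largest jump between consecutive notes. It is an illustrative sketch, not the MAX patch we actually used, and the helper names `transcode` and `largest_jump` are hypothetical.

```python
# Amino acid order as it appears in the table above.
AMINO_ACIDS = "ARNDCEQGHILKMFPSTWYV"

# One octave of G pentatonic, from G (#55) to G (#67).
OCTAVE = [55, 57, 59, 62, 64, 67]

# Cycle through the six notes so that several amino acids share each note.
folded = {aa: OCTAVE[i % len(OCTAVE)] for i, aa in enumerate(AMINO_ACIDS)}


def transcode(sequence, mapping):
    """Convert a single letter code sequence into MIDI notes, skipping spaces."""
    return [mapping[aa] for aa in sequence if not aa.isspace()]


def largest_jump(notes):
    """Largest distance, in semitones, between two consecutive notes."""
    return max((abs(b - a) for a, b in zip(notes, notes[1:])), default=0)


if __name__ == "__main__":
    prefix = "CDRRFSRSDE LTRHIRIHTG"  # first 20 residues of the sequence
    melody = transcode(prefix, folded)
    print(melody)
    print("largest jump (semitones):", largest_jump(melody))
```

Because every note now lies between #55 and #67, no two consecutive notes can be more than twelve semitones (one octave) apart.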

Once our notes had been restricted to a single octave (minimizing the large jumps between consecutive notes), we played the protein sequence and recorded the output in the music-editing program Logic (Figure 4). This allowed us to arpeggiate notes and add a variety of other effects in order to create a more modern sound (Figure 5). We repeated this process using multiple different instrument sounds, then overlaid each track to create a song that involved drums, bass, guitar, and synth, in a variety of octaves and tempos.

Figure 4. Logic screen

Figure 5. Arpeggiator screen (within Logic)
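We recorded the live output of the MAX patch into Logic. An alternative that we did not use would be to write the notes to a Standard MIDI File and import that file into a music-editing program. The Python sketch below shows one minimal way to do this with only the standard library; the function names, the note length, the velocity, and the file name `zenk.mid` are all assumptions chosen for the example.

```python
import struct


def vlq(value):
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def notes_to_smf(notes, ticks_per_note=240, velocity=90, ppq=480):
    """Build a format 0 Standard MIDI File that plays the notes one after another."""
    track = bytearray()
    for note in notes:
        track += vlq(0) + bytes([0x90, note, velocity])        # note on, channel 1
        track += vlq(ticks_per_note) + bytes([0x80, note, 0])  # note off
    track += vlq(0) + bytes([0xFF, 0x2F, 0x00])                # end-of-track meta event
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ppq)      # format 0, one track
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)


if __name__ == "__main__":
    melody = [64, 62, 57, 57, 57, 62, 57, 62, 62, 67]  # first ten ZENK residues, single octave
    with open("zenk.mid", "wb") as f:
        f.write(notes_to_smf(melody))
```

With 480 ticks per quarter note, 240 ticks per note gives a steady stream of eighth notes; effects such as arpeggiation would still be added afterwards in the editing program.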

Overall, we found this project to be both difficult and enjoyable. Creating a pop song from a protein sequence is not an easy process. While our first attempt may not be a #1 hit, we think it is a decent first demonstration of the overlap between protein sequences and popular music, and hope that it will generate new interest in transcoding and protein music.